[Repost] Data Science Is Dying

Why 85% of data science projects fail

Before reading this, a quick disclaimer here. Apart from the statistics I mentioned, everything else is just my opinion. Take it with a huge grain of salt. Otherwise, enjoy!

The failure rate of data science projects is a well-documented challenge. According to Gartner, over 85% of data science projects fail.

A report from Dimensional Research indicated that only 4% of companies have succeeded in deploying ML models to a production environment. I’ve recently discovered that the best results from Kaggle competitions don’t always translate to real-world applicability.

Kaggle is for playtime

In a recent competition I participated in, the winner cheated by incorporating real-world data from the internet into the provided dataset. The task was to predict the future USD-Naira exchange rate for the two-week period from May 22, 2024, to June 4, 2024, based on historical data. The winner, who shall not be named, waited until those dates arrived, gathered real-world data, integrated it into the training set, and then engineered lag features (7 lags) and multi-step targets (13 steps) based on this updated dataset.
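For anyone unfamiliar with the terms, here is a minimal sketch of what lag features and multi-step targets look like when they are built only from data available before the forecast window. The series is made up (not real exchange rates), and `make_supervised`, `cutoff`, and the other names are my own for illustration, not the winner's code. It uses plain Python so it runs anywhere; with pandas you would typically reach for `shift` instead.

```python
from datetime import date, timedelta


def make_supervised(values, n_lags=7, n_steps=13):
    """Turn a 1-D series into (lag features, multi-step targets) rows.

    Row i uses the n_lags values before position i as features and the
    n_steps values starting at position i as targets.
    """
    rows = []
    for i in range(n_lags, len(values) - n_steps + 1):
        features = values[i - n_lags:i]
        targets = values[i:i + n_steps]
        rows.append((features, targets))
    return rows


# Hypothetical daily series from Apr 1 to Jun 4, 2024 (made-up numbers).
start = date(2024, 4, 1)
dates = [start + timedelta(days=d) for d in range(65)]
rates = [1300.0 + 2.0 * d for d in range(65)]

# Honest setup: keep only what was known before the forecast window opened.
cutoff = date(2024, 5, 21)
history = [r for d, r in zip(dates, rates) if d <= cutoff]

rows = make_supervised(history, n_lags=7, n_steps=13)
print(len(history), "days of history ->", len(rows), "training rows")
```

The important line is the `cutoff` filter: nothing dated inside the window you are asked to predict ever reaches the training rows. Drop that filter and you get the setup described above.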

This is blatant cheating; of course, he’s going to win. How can you use real-world data to train your model, predict real-world outcomes, and then conveniently forget to mention it? You shouldn’t use actual future data to train your model to predict that same future data. Like, are you a time traveler? I’m not impressed😤. I’ve actually been following his work for a while, so I’m heartbroken. Read his solution if you think I’m exaggerating. If you’re going to cheat, do it with your full chest.

As for the second-place winner, the only reason he secured that position was that he trained his model on Kaggle. Kaggle models are democratized, so this doesn’t count for much. He probably just experimented with different random seeds on the platform until he surpassed the best score. Here is his solution.

He even acknowledged it himself. At least his approach is more grounded than the first one. He deserves to win; too bad he didn’t cheat.

This is not to say that we should start cheating ourselves. “If you can’t beat them, join them” does not apply in this case. The main issue with Kaggle competitions and most data science competitions alike is that they often skip the most crucial part of any data science project: sourcing and cleaning the dataset. Through my extensive research, I’ve discovered that in the real world, modeling is not nearly as critical. Let me explain.

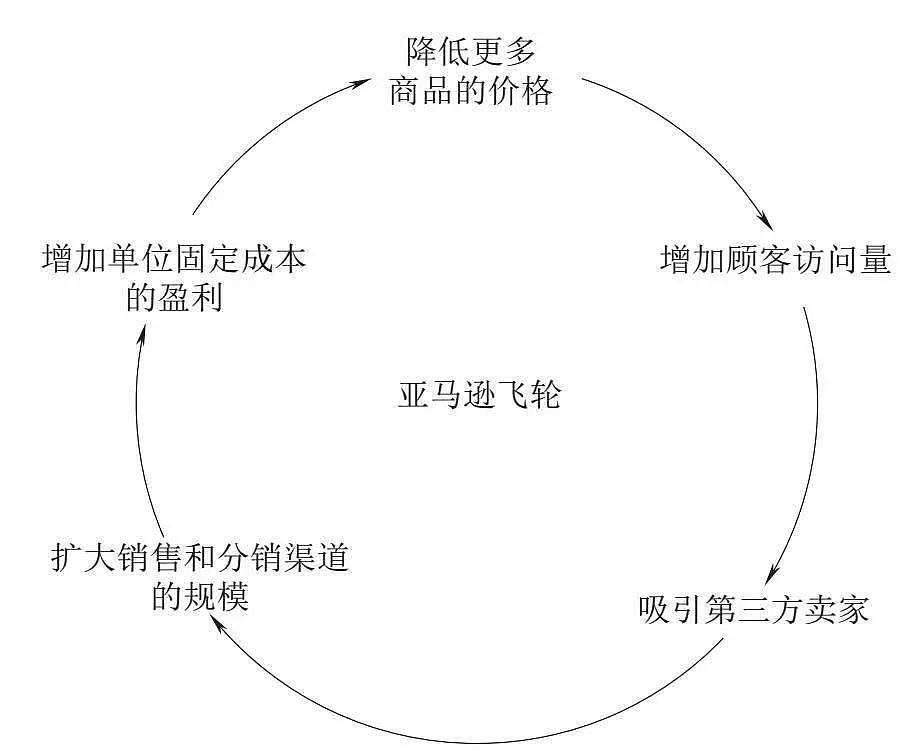

In practical scenarios, once a company has a cleaned dataset, they simply input it into an AutoML system, which then generates the best model for that dataset. Furthermore, most real-world problems involve classification and regression, for which gradient boosters are widely acknowledged as the best models. If you doubt what I’m saying, look it up!

Most studies are bulls**t

Excuse my language, but what is the point of doing a study to find out which models are better for food demand forecasting, for example, when we already know the best models for those problems? It’s like running on a hamster wheel. Literally useless research. And trust me, those research papers that “prove” otherwise have collected the dataset in a specific way, even manipulated it, and show you specific visualizations in order to reaffirm their pre-determined bias. Most of those projects are not applicable in real life anyway.

I recently implemented a research paper that proves my point. I recommend you check it out. The researcher created lots of features that were based on the target variable; I mean, of course, a variable that was engineered from the target would have a high correlation with the target.

This is plain cheating, because to predict on unseen data, how would you create those features when they were created from the very target you want to predict? But of course, as you would expect, he basically made predictions with a model that was trained on the data he was trying to predict. Cheating!
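To make the problem concrete, here is a toy sketch of target leakage. Everything in it is hypothetical: the data is randomly generated, and `price`, `demand`, and `leaky` are names I made up, not anything from the paper. It compares an honest feature with one computed from the target itself.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical data: demand (the target) depends on price plus noise.
price = [rng.uniform(1, 10) for _ in range(200)]
demand = [100 - 5 * p + rng.gauss(0, 10) for p in price]

# Leaky feature: computed directly from the target we want to predict.
leaky = [d / 2 + rng.gauss(0, 1) for d in demand]

corr_honest = pearson(price, demand)
corr_leaky = pearson(leaky, demand)
print(f"honest feature vs target: {corr_honest:.2f}")
print(f"leaky feature vs target:  {corr_leaky:.2f}")

# At prediction time the target is unknown, so the leaky feature
# simply cannot be built for a new row.
new_row = {"price": 4.0}
can_build_leaky = "demand" in new_row
print("can build leaky feature for new row:", can_build_leaky)
```

The leaky feature looks fantastic on paper precisely because it is the answer in disguise. The moment you get a new row with no `demand` value, there is nothing to compute it from.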

The way forward

So what is the “solulu” to the “delulu”? As for me, from now on, I’m going back to sharpening my data sourcing and data cleaning skills. I’m going back to dashboards and SQL. Even if I still use Python, I’ll be focusing on the data preparation and analysis parts. Making future predictions is only reasonable when you have a clean dataset, and downloading a clean dataset from Google or any of these websites, which is what I’ve been doing recently, doesn’t reflect real-world data experience.

I also still believe in reimplementing research projects, even though most are heavily biased. I’ll have to do a better job at picking them, I guess.

So follow along on my journey to improving my data skills. I want to learn how data works in the real world, not how to win a competition by cheating.

My opinion is obviously biased here, plus I’m a pretty sore loser😭😒, so I’d like to hear your thoughts on the matter😏. Otherwise, stay tuned!